I Played Flappy Bird in ChatGPT... Not Good!

GPT-4 is fantastic, but is it good enough to be a Game Engine? I tried this with Flappy Bird, using a simple LangChain program and some Prompt Engineering.

We all know Flappy Bird, the simple game of a bird avoiding pipes (the bird is called Faby, if you didn’t know :D). It’s a good practice project for beginner programmers. After seeing Code Bullet fail to use ChatGPT as a game engine for Flappy Bird, I was determined to take up the challenge! It’s a cool video, by the way.

What Does It Mean to Use ChatGPT as a Game Engine?

If we wanted to make our own Flappy Bird, say, in Python, we would have to code all the game logic: the rules, the gravity pulling the bird down, the bird flapping up, keeping the score, moving the pipes left and generating new ones, and of course tackling a handful of unexpected bugs, which is the essence of programming.

But here, I’m not doing ANY of that. I want ChatGPT to create the state of the Flappy Bird game step by step. I don’t want to code any of the game rules and mechanics; I want to explain them to ChatGPT and have it generate the game frame by frame.

Let’s Build It!

As you can see in the outline above, I formulate my prompt using two ingredients:

[current state of the game] + [user command]
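In code, this recipe is just string formatting. Here is a minimal sketch; the helper name build_user_message is hypothetical, and its layout simply mirrors the human-message template used with LangChain later in this article. It also rejects anything other than the two commands the game supports, UP and NEXT.

```python
def build_user_message(command: str, cur_state: str) -> str:
    """Combine [current state of the game] + [user command] into one prompt string.

    Hypothetical helper: the wording mirrors the human-message template
    passed to ChatPromptTemplate in this project.
    """
    if command not in ("UP", "NEXT"):
        raise ValueError(f"unknown command: {command!r}")
    return f"COMMAND: {command} \n, current state of the game: \n {cur_state}"


frame = "000|0\n0>000\n000|0"
print(build_user_message("UP", frame))
```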

For communicating with ChatGPT, I’m using LangChain. It’s a very powerful tool when it comes to AI chatbots, but I am only using a pinprick of its power.

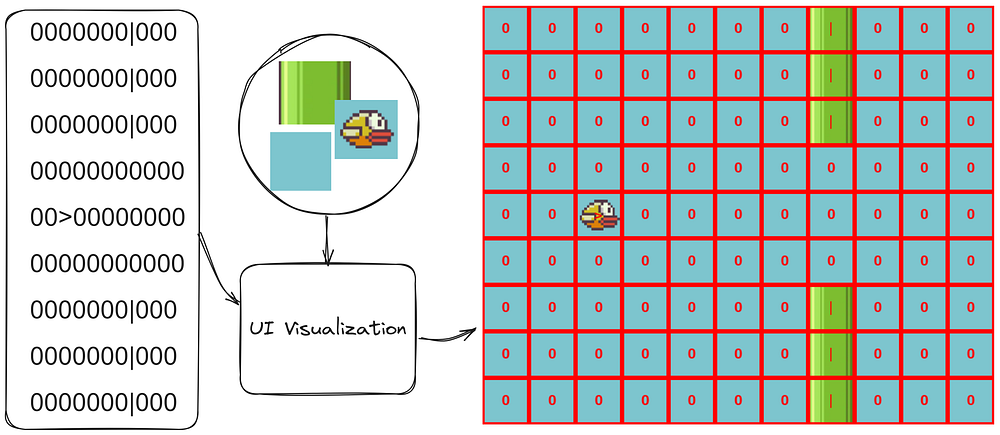

To represent each frame of Flappy Bird, I encode it as text, using > for the bird, | for pipes, and 0 for empty space. Hopefully, by keeping the game state simple and using only a few characters, we won’t confuse ChatGPT. Here’s an image that shows how we see the game vs. how ChatGPT perceives it:

In Flappy Bird, you can either JUMP (or go UP), or you can do nothing (show the NEXT frame). So, for the user commands, we have either UP or NEXT.
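If you’d rather generate frames than type them by hand (for example, to build few-shot examples), a small renderer does the job. This is a sketch under my own assumptions, not code from the repo: the Pipe and GameState classes, the grid size, and the idea of a pipe as a full column with an opening of gap_size rows are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pipe:
    col: int        # column index of the pipe (0 = leftmost)
    gap_top: int    # first row of the opening the bird can fly through
    gap_size: int   # number of open rows in the pipe


@dataclass
class GameState:
    height: int
    width: int
    bird_row: int   # 0 = top row
    bird_col: int
    pipes: List[Pipe] = field(default_factory=list)


def render(state: GameState) -> str:
    """Encode a frame as text: '>' bird, '|' pipe, '0' empty space."""
    rows = []
    for r in range(state.height):
        row = ["0"] * state.width
        for p in state.pipes:
            in_gap = p.gap_top <= r < p.gap_top + p.gap_size
            if 0 <= p.col < state.width and not in_gap:
                row[p.col] = "|"
        if r == state.bird_row:
            row[state.bird_col] = ">"  # the bird is drawn on top of the row
        rows.append("".join(row))
    return "\n".join(rows)


state = GameState(height=5, width=6, bird_row=2, bird_col=1,
                  pipes=[Pipe(col=4, gap_top=1, gap_size=2)])
print(render(state))
# 0000|0
# 000000
# 0>0000
# 0000|0
# 0000|0
```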

To begin chatting with ChatGPT using LangChain, you must put your OPENAI_API_KEY in a .env file. This API key is needed to work with ChatGPT (how to get an API key). Next, we set up the LLM module, specifying gpt-4-turbo as our model.

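```python
from langchain_core.prompts import (
    ChatPromptTemplate,
    FewShotChatMessagePromptTemplate,
)
# Older LangChain releases exposed this as langchain.chat_models.ChatOpenAI;
# current releases ship it in the separate langchain-openai package.
from langchain_openai import ChatOpenAI
from dotenv import load_dotenv

from game_prompts import *  # SYSTEM_PROMPT and the few-shot example states

# Load OPENAI_API_KEY from the .env file into the environment
load_dotenv(dotenv_path=".env")

# A low temperature keeps the generated frames more deterministic
llm = ChatOpenAI(model="gpt-4-turbo", temperature=0.1)
```

Choose Your Words Carefully!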

The success of running Flappy Bird with ChatGPT (or almost any other task when it comes to LLMs) comes down to only two things:

How powerful the LLM is.

How well we can squeeze what we need out of the LLM.

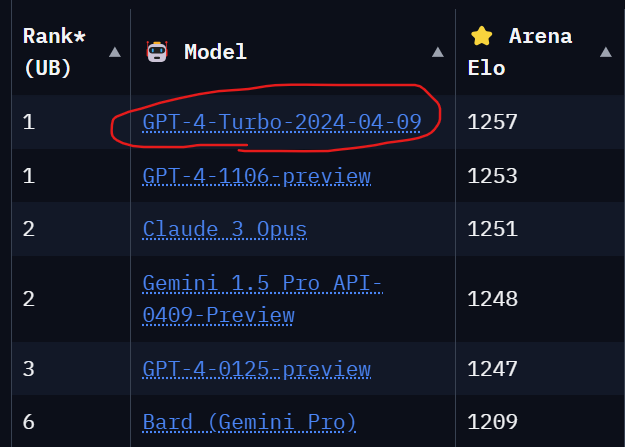

There is no other factor here that affects the results. In this experiment, I am using GPT-4-turbo as my language model, which, as of May 2024, is the most powerful LLM available.

Although we cannot change the inner workings of GPT-4-turbo to make it more powerful (unless we’re Altman), we can write better prompts that help ChatGPT grasp the logic of the game we want and give us accurate outputs.

This practice is called Prompt Engineering. It’s a fancy name for “choose your words carefully so the model can understand you better.” Simple techniques and methods in writing your prompts can drastically change the quality of your outputs.

Setting Up LangChain

You can check out the repository of this project at the end of the article and run it yourself. Here, I will only explain some parts of the LangChain setup and how I describe the rules of Flappy Bird to ChatGPT. Given how much prompting matters, I use this system prompt to instruct ChatGPT:

```python
# game_prompts.py (kept separate from the main script)
# Adjacent string literals are joined automatically; each piece ends with a
# space so that words don't run together in the final prompt.
SYSTEM_PROMPT = (
    "You should help simulate the game of Flappy Bird, "
    "where there is a bird and it should "
    "avoid the pipes or the bird would die. "
    "At each prompt I will give you the current "
    "state of the game along with a command, "
    "and you need to generate the next state of the game, "
    "showing the bird with '>', the pipes with '|' and empty spaces with '0'. "
    "Only if the bird hits a pipe ('>' and '|' collide), "
    "return 'DEAD'. Otherwise, generate the next frame. "
    "If the user prompts you 'UP', the bird "
    "must go up 2 units, and if the user prompts 'NEXT', "
    "it means they don't have a specific command, "
    "and you should generate the next frame with "
    "the bird going down 1 unit because of gravity. "
    "The bird doesn't move left or right, "
    "but only up and down. Only the pipes move to the left each time. To recap, "
    "you will only receive 2 kinds of commands: "
    "'UP', 'NEXT'. You will receive them coupled with the current state of the "
    "game that you can use to generate the next state. "
    "Sometimes you also generate a new set of pipes "
    "coming from the rightmost part."
)
```

It is good practice to keep your prompts separate from your code. I keep mine in a separate .py file, but you can use .json or any format you like.
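Since ChatGPT has to apply these rules purely from text, it helps to have a deterministic reference to compare its answers against. The sketch below is my own illustration of the rules as the system prompt states them, not part of the project: step_frame is a hypothetical name, a bird that would leave the grid is clamped to the edge (the prompt doesn’t say what happens there), a collision is only checked at the bird’s final cell, and new pipes enter through an optional new_column argument.

```python
from typing import List, Optional


def step_frame(frame: str, command: str, new_column: Optional[str] = None) -> str:
    """Apply one command to a text frame, following the rules in SYSTEM_PROMPT.

    '>' is the bird, '|' a pipe and '0' empty space. UP moves the bird up 2 rows,
    NEXT lets gravity pull it down 1 row, and every pipe shifts one column left.
    Returns the next frame, or "DEAD" if the bird ends up on a pipe cell.
    """
    if command not in ("UP", "NEXT"):
        raise ValueError(f"unknown command: {command!r}")
    rows: List[List[str]] = [list(row) for row in frame.strip().splitlines()]
    height = len(rows)

    # Locate the bird; it should appear exactly once.
    birds = [(r, c) for r, row in enumerate(rows) for c, ch in enumerate(row) if ch == ">"]
    if len(birds) != 1:
        raise ValueError(f"expected exactly one bird, found {len(birds)}")
    bird_row, bird_col = birds[0]
    rows[bird_row][bird_col] = "0"  # take the bird out while the pipes move

    # Shift every row one cell to the left; the new rightmost cell is empty,
    # or comes from new_column (one character per row) when a pipe enters.
    if new_column is not None and len(new_column) != height:
        raise ValueError("new_column must have one character per row")
    for r in range(height):
        incoming = new_column[r] if new_column is not None else "0"
        rows[r] = rows[r][1:] + [incoming]

    # Move the bird vertically (row 0 is the top), clamped to the grid.
    dy = -2 if command == "UP" else 1
    new_row = min(max(bird_row + dy, 0), height - 1)
    if rows[new_row][bird_col] == "|":
        return "DEAD"
    rows[new_row][bird_col] = ">"
    return "\n".join("".join(row) for row in rows)


print(step_frame("000|0\n0>000\n000|0", "NEXT"))
# 00|00
# 00000
# 0>|00
```

A reference like this could also produce correct next states for extra few-shot examples instead of writing them by hand.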

Next, we will use few-shot prompting to give ChatGPT examples of the prompts we will send and the outputs we expect. I keep these example states (INIT_STATE, INIT_UP_STATE, etc.) in the same prompts file as my SYSTEM_PROMPT (game_prompts.py, imported above), so you can check them out in the repository.

```python
# Each example pairs a (command, current state) prompt with the expected next state.
# The outputs are plain strings; wrapping them in {...} would create Python sets.
examples = [
    {"input": f"COMMAND: UP \n, current state of the game: \n {INIT_STATE}", "output": INIT_UP_STATE},
    {"input": f"COMMAND: NEXT \n, current state of the game: \n {INIT_UP_STATE}", "output": INIT_UP_NEXT_STATE},
    {"input": f"COMMAND: UP \n, current state of the game: \n {SAMPLE_STATE_1}", "output": SAMPLE_STATE_1_OUTPUT_UP},
    {"input": f"COMMAND: UP \n, current state of the game: \n {SAMPLE_STATE_2}", "output": SAMPLE_STATE_2},
]

# How a single example is rendered: a human turn followed by the ideal AI reply
example_prompt = ChatPromptTemplate.from_messages(
    [
        ("human", "{input}"),
        ("ai", "{output}"),
    ]
)

few_shot_prompt = FewShotChatMessagePromptTemplate(
    example_prompt=example_prompt,
    examples=examples,
)
```

The next step in setting up our chain is making the template of our prompt, which consists of the system prompt, the few-shot examples, and the format of the user prompts we will send to the model. We also create our chain. A chain in LangChain can be explained as a series of connected modules, such as prompt templates, models, and output parsers. In our example, we keep it minimal with only two modules: the prompt and the llm.

```python
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", SYSTEM_PROMPT),
        few_shot_prompt,
        ("human", "COMMAND: {command} \n, current state of the game: \n {cur_state}"),
    ]
)

# The chain: the formatted prompt is piped straight into the model
prompt_and_model = prompt | llm
```

LangChain can feel overwhelming, as many classes and methods look alike. In my experience, seeing LangChain at work in bigger, more complex projects helps you distinguish what each module does.

Finally, we need a function to call ChatGPT and get an output (that is the whole point :D). This is done with the chain’s invoke method.

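```python
# user_command is "UP" or "NEXT"; cur_state is the current frame as text
output = prompt_and_model.invoke({
    "command": user_command,
    "cur_state": cur_state,
})
next_state = output.content  # invoke returns an AIMessage; the frame text is in .content
```

What remains is visualizing the game, which I leave for you to check out in the repo.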

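Playing the game is then just a loop: each reply becomes the next cur_state until the model answers DEAD. Here is a minimal sketch, assuming a hypothetical next_frame(command, cur_state) callable that wraps the invoke call above and returns output.content; a stub stands in for the model so the loop can run without an API key.

```python
from typing import Callable, Iterable, List


def play(next_frame: Callable[[str, str], str], init_state: str,
         commands: Iterable[str]) -> List[str]:
    """Run the game frame by frame and return every frame, stopping early on "DEAD".

    next_frame(command, cur_state) is assumed to return the model's reply as text.
    """
    frames = [init_state]
    cur_state = init_state
    for command in commands:
        reply = next_frame(command, cur_state).strip()
        frames.append(reply)
        if reply == "DEAD":
            break  # the game is over; stop asking for frames
        cur_state = reply  # the model's answer becomes the next input
    return frames


# With the chain from above, next_frame could look like this (hypothetical wrapper):
# def llm_next_frame(command, cur_state):
#     output = prompt_and_model.invoke({"command": command, "cur_state": cur_state})
#     return output.content

# A stub "model" so the loop can be tried offline: it dies on the first UP.
def fake_model(command: str, cur_state: str) -> str:
    return "DEAD" if command == "UP" else cur_state


print(play(fake_model, "0>0", ["NEXT", "UP", "NEXT"]))  # ['0>0', '0>0', 'DEAD']
```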
How Did It Turn Out?

Not according to my expectations!

The image above shows the first 9 game states generated by GPT-4. As you can see, they don’t make much sense:

The AI completely ignores the rules and mechanics of the game. In step 3, you can see the pipe is broken!

GPT fails to detect the states where the bird hits the pipe and dies.

Game states are not consistent: from step 8 to step 9, the bird moves backward!
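Some of these failures can be caught automatically. The checker below is a hypothetical helper, not part of the repo: it compares two consecutive frames and flags a changed frame size, unexpected characters, a missing or duplicated bird, and a bird that drifts left or right. It won’t spot every broken pipe, but a backward move like the one between steps 8 and 9 should show up as a horizontal drift.

```python
from typing import List

ALLOWED = set(">|0")


def _rows(frame: str) -> List[str]:
    # Ignore stray whitespace the model may add around the grid
    return [row.strip() for row in frame.strip().splitlines()]


def check_frame(prev: str, new: str) -> List[str]:
    """Return the rule violations found when going from frame `prev` to frame `new`."""
    if new.strip() == "DEAD":
        return []
    prev_rows, new_rows = _rows(prev), _rows(new)
    problems = []
    if len(new_rows) != len(prev_rows) or any(
        len(a) != len(b) for a, b in zip(prev_rows, new_rows)
    ):
        problems.append("frame size changed")
    if set("".join(new_rows)) - ALLOWED:
        problems.append("unexpected characters")
    birds = [c for row in new_rows for c, ch in enumerate(row) if ch == ">"]
    prev_birds = [c for row in prev_rows for c, ch in enumerate(row) if ch == ">"]
    if len(birds) != 1:
        problems.append(f"expected 1 bird, found {len(birds)}")
    elif prev_birds and birds[0] != prev_birds[0]:
        # The bird only moves up and down, so its column must stay the same
        problems.append("bird moved horizontally")
    return problems


print(check_frame("0>0", ">00"))  # ['bird moved horizontally']
```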

This was pretty surprising to me. Correctly generating Flappy Bird game states requires understanding and manipulating structured text that stands in for a graphical scene. It’s a simple test of reasoning capabilities. I expected an elaborate system prompt plus few-shot examples to be enough for a mammoth model such as GPT-4 to generate consistent and logical Flappy Bird game states.

Testing with GPT-3.5-turbo wasn’t much different either. Finally, to confirm that my few-shot examples were doing more good than harm, I commented them out; the results were even worse, so I am keeping them for now.

Can YOU 🫵 Do It?

Aside from the model’s capabilities, this challenge boils down to Prompt Engineering. Could it be that with a better prompt, we can have GPT-4 play us a simple game of Flappy Bird?

You can test your own prompts and see if you can make the bird fly! Please make sure to star the project ⭐ if you check it out.

GitHub - hesamsheikh/flappy-gpt: GPT as Game Engine to Run Flappy Bird


Some things to improve:

Better System Prompt: I have tried to come up with a system prompt that explains the goal, how to better encode the game in a text-based format, and the rules of the game. Could it be that by coming up with a better System Prompt we can make the game rules sound simpler and easier for GPT to follow?

More Few-Shot Examples: In my few-shot examples, I have used 4 game states resulting from a previous game state + user command. Could using more game states, say 20, help GPT get the gist of the task?

Eliminating the “DEAD” Scenario: Currently, I have included a “DEAD” game state where the bird hits a pipe and dies. Simplifying the game by removing the logic for the bird dying would make the system prompt shorter and might help GPT generate the regular states more consistently.
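For the second and third ideas, you don’t need to write every example by hand. Assuming you have recorded (command, current state, next state) transitions, for instance from a rule-based version of the game, a hypothetical make_examples helper can turn them into the same {"input", "output"} dictionaries used for few_shot_prompt, capped at a chosen count and optionally skipping DEAD states.

```python
from typing import Dict, List, Tuple

# (command, current_state, next_state), e.g. recorded from a rule-based version of the game
Transition = Tuple[str, str, str]


def make_examples(transitions: List[Transition], limit: int = 20,
                  include_dead: bool = True) -> List[Dict[str, str]]:
    """Turn recorded transitions into few-shot examples in the same input/output format."""
    examples: List[Dict[str, str]] = []
    for command, cur_state, next_state in transitions:
        if not include_dead and next_state.strip() == "DEAD":
            continue  # drop the "DEAD" scenario entirely
        examples.append({
            "input": f"COMMAND: {command} \n, current state of the game: \n {cur_state}",
            "output": next_state,  # a plain string, as the "{output}" template expects
        })
        if len(examples) >= limit:
            break
    return examples
```

The result can be passed as the examples argument of FewShotChatMessagePromptTemplate in place of the hand-written list.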

Perhaps the probabilistic nature of these models doesn’t lend itself to logical, rule-bound tasks such as playing a game by its rules. I don’t like to believe that. Furthermore, by fine-tuning a model such as Llama 3 on a dataset generated by playing the game, we could take this challenge to the next level.
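The same recorded transitions could serve as a fine-tuning dataset. Chat-style JSONL, with one "messages" list per line, is one common layout (OpenAI’s fine-tuning API uses it, and many open-source trainers accept something similar), but the exact schema depends on the tool you use to fine-tune Llama 3, so treat this as a sketch; write_chat_jsonl is a hypothetical name.

```python
import json
from typing import Iterable, Tuple


def write_chat_jsonl(path: str, system_prompt: str,
                     transitions: Iterable[Tuple[str, str, str]]) -> int:
    """Write one chat-formatted training record per (command, state, next_state).

    Returns the number of records written.
    """
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for command, cur_state, next_state in transitions:
            record = {"messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user",
                 "content": f"COMMAND: {command} \n, current state of the game: \n {cur_state}"},
                {"role": "assistant", "content": next_state},
            ]}
            f.write(json.dumps(record) + "\n")
            count += 1
    return count
```

Reusing SYSTEM_PROMPT and the same user-message layout keeps the training data consistent with what the model will see at play time.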

🌟 Join +1000 people learning about

Python🐍, ML/MLOps/AI🤖, Data Science📈, and LLM 🗯

Follow me and check out my X/Twitter, where I post daily updates:

https://twitter.com/itsHesamSheikh

Thanks for reading,

— Hesam